Archive for the 'Programming' category

Getaway! Hack

January 14, 2025

There are a few go-to games for the Atari 800 that I play frequently. One of them is a cops and robbers game called Getaway! Without getting into too much detail about the gameplay, you play the robber who canvasses the city looking for treasure to steal and unmarked white vans to hijack and loot. The cops roam the city, essentially at random, until you perform a specific action or a gameplay event takes place. One such action is robbing the white van, and an event could be the game transitioning to dusk or nightfall. Once that happens, the cops immediately seek you out and give chase. As your score gets larger, they also become more suspicious and start chasing you sooner.

I find that the game gets considerably more difficult around 7000 points and I have yet to break the 8000 point barrier as of this writing.

As you progress through the game, there are different treasures that appear, giving different point values when you collect them. I wanted to know how many treasures there were in the game and what happens as you get to higher scores. Also, I have often thought that the game would be fun for young kids, if the police did not act so aggressively. They would have a blast driving around and collecting the treasure. Note that the game isn’t fun for an adult when this change is made, but a four year old would probably love it. If you find the modified game entertaining, you may not want to post that in the comments.

The author of the game, Mark Reid, released the source code to the game in 2017 on GitHub. It is written in assembly language but some of the basics can be puzzled out if you are familiar with a few core instructions. Like many computers of the era, the system is driven by a 6502 processor. Unlike many of its competitors, however, the Atari clocks it at a blazing 1.79 MHz on NTSC systems.

At any rate, I wanted to modify the game so that the cops would always remain in their random, passive state. The code refers to this state as “dumb” and the opposite behaviour as “smart.” To change it, just modify this piece of code:

COP031 CPX #4 ;Van is dumb

BCS DUMB

LDA RANDOM ;if SKILL>RANDOM

CMP SKILL ; COPs smart

BCC SMART

To this:

COP031 CPX #4 ;Van is dumb

BCS DUMB

LDA RANDOM ;if SKILL>RANDOM

CMP SKILL ; COPs dumb

BCC DUMB

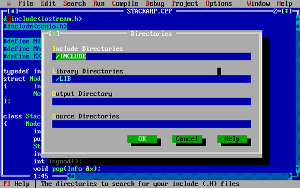
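To see why that one-word change pacifies the police, here is a rough Python model of the branch logic in COP031. It is only a sketch of the excerpt, not the game's actual code: it assumes that RANDOM yields a uniformly distributed byte, that SKILL is a single byte, and that whatever follows the final branch is the DUMB routine (the excerpt does not show it). The names cop_mode and smart_chance are mine.

```python
def cop_mode(x, rand_byte, skill, patched=False):
    """Model one pass through COP031 and return 'DUMB' or 'SMART'.

    x         -- value of the X register (X >= 4 is the van in the excerpt)
    rand_byte -- byte read from RANDOM, 0..255
    skill     -- value of SKILL, 0..255
    patched   -- True models the modified branch (BCC DUMB)
    """
    # CPX #4 / BCS DUMB: CPX sets the carry when X >= 4.
    if x >= 4:
        return "DUMB"
    # LDA RANDOM / CMP SKILL: the carry is clear when RANDOM < SKILL,
    # so BCC is taken exactly in that case.
    if rand_byte < skill:
        return "DUMB" if patched else "SMART"
    # Assumption: the code after the branch is the DUMB routine.
    return "DUMB"


def smart_chance(skill, patched=False):
    """Fraction of the 256 possible RANDOM bytes that make a cop smart."""
    smart = sum(cop_mode(0, r, skill, patched) == "SMART" for r in range(256))
    return smart / 256


print(smart_chance(64))                # 0.25
print(smart_chance(64, patched=True))  # 0.0
```

Under those assumptions, a larger SKILL value makes the cops smart more often in the original code, while the patched branch never reaches SMART at all.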

Assemble the code. I think I needed to fix a few issues first, such as disabling debug mode, which is set at the top of the module. If you leave the debug mode set to ‘2’, it will produce a weird looking map by default.

;

; compilation flags

;

DEBUG = 0 ; nonzero includes debugging code & sets debug map

There may also have been a few include paths that needed to be changed. At any rate, once those changes are done the assembler will produce a “getaway.exe” file. It is not a Windows executable file so don’t try to run it; just rename it to “getaway.xex” since that seems to be the format. I copied the file to my Lotharek drive and played it on the Atari, but you could also fire it up in an emulator.
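If you want to confirm that the assembler output really is an Atari binary load file before renaming it, a few lines of Python will do. This is a minimal sketch based on the usual layout of that format: a $FFFF signature, then for each segment a little-endian start address, an end address, and the bytes in between. The function name parse_xex is my own, and I have not checked it against every loader quirk.

```python
import struct


def parse_xex(data):
    """Split an Atari binary load file into (start, end, payload) segments."""
    if data[:2] != b"\xff\xff":
        raise ValueError("missing $FFFF signature; not an Atari binary load file")
    segments = []
    pos = 0
    while pos < len(data):
        # The $FFFF marker is required on the first segment, optional afterwards.
        if data[pos:pos + 2] == b"\xff\xff":
            pos += 2
        if pos + 4 > len(data):
            raise ValueError("truncated segment header")
        start, end = struct.unpack_from("<HH", data, pos)
        pos += 4
        length = end - start + 1
        if length <= 0 or pos + length > len(data):
            raise ValueError("segment length does not fit the file")
        segments.append((start, end, data[pos:pos + length]))
        pos += length
    return segments


# Tiny hand-made file: three bytes loaded at $2000, then the run address
# vector at $02E0-$02E1 pointing back at $2000.
sample = b"\xff\xff\x00\x20\x02\x20\x01\x02\x03\xe0\x02\xe1\x02\x00\x20"
for start, end, payload in parse_xex(sample):
    print(f"${start:04X}-${end:04X}: {len(payload)} bytes")
```

To inspect a real build, read the file in binary mode and pass the bytes in, for example `parse_xex(open("getaway.exe", "rb").read())`. A Windows executable would fail the signature check because it starts with “MZ”.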

Categories: Atari 8-bit, Games, Programming


FreeDOS on an eBox 2300SX-JSK

December 16, 2023

If you want USB thumb drives to work on FreeDOS out of the box on this hardware platform, make sure that the BIOS boot order has the USB drive before the CompactFlash drive. There is also no hot plug support, so insert your thumb drive into the device before you boot. Lastly, I would hazard a guess that not every thumb drive will work. I used a Kingston DataTraveler 1GB USB stick.

Categories: FreeDOS, Hardware, PC, Programming


Epic Games Launcher Stuck

August 19, 2023

While downloading the update to Unreal Engine 5.2.1, the launcher appeared to be stuck at 29%. Following these steps cleared that up.

Categories: Programming, Unreal Engine


Book Review

May 8, 2023

Let’s talk about this book written by Bob Flanders and Michael Holmes in 1993 entitled “C LAB NOTES”.

The title hints that this may be a book about programming in the C language. Spoiler: it is not a book about how to program in C, even though it uses the C language. Instead, it provides you with several interesting examples of how to tackle real-world problems (at least, problems that existed in 1993). Here is a rundown of the topics being explored:

- Setting the system clock via modem and the United States Naval Observatory’s cesium clock.

- Collecting program statistics around interrupts used, video modes, disk access.

- Running programs on other nodes via Novell NetWare; sending messages is also explored.

- Interacting with laser printers.

- Phone dialing using a modem.

- Synchronizing directory contents.

- Automatically retrieving the latest files from multiple directories.

- Analyzing disk structure, such as clusters and sectors.

- Managing your appointments with a custom calendar.

This was such a fascinating book back in the day. I had limited income to spend on expensive computer programming texts. Many of the programming books that were available in my local bookstore tended to focus on abstract problems that served to introduce foundational concepts. It was so refreshing to see these sorts of problems being explored. Even today, so much literature is written around how a specific technology works, but so few books are written where the author simply assumes you know something about the technologies being used. If authors used those assumptions constructively, they could dive straight into interesting problems. I would imagine it might be more difficult to find a publisher who would back you on writing a book like this today; they may look at the material and say it would be too hard for most readers to understand, and therefore would not sell particularly well. They would probably be right on both counts.

In any case, I think we can all breathe a collective sigh of relief that advanced books like these are being quickly phased out and replaced by much more mainstream titles. After all, once a programmer knows how to sort a linked list or draw a simple scene in OpenGL or Unity, there is really nothing else worth exploring.

Categories: Books, DOS, Programming


MS-DOS is Cross Platform

May 2, 2023

Cross platform programming is a labour of love. It also requires a large time investment to get it right, so you should only do it if you really need to. There are many technologies available to help you on that journey; if you are building an application, then you’ll probably take a different path than if you are programming a game, and it’s the latter scenario that I would like to dig into a bit.

Let’s suppose you want to program a small game that doesn’t require easy access to 3D hardware, fancy input devices, or the Internet. Okay, I probably lost most of you right there, but for those that are a tiny bit intrigued, let’s continue.

I have a lot of nostalgia around the MS-DOS platform. It was not the first platform on which I began programming (that honour belongs to the Atari 800 XL), but it was still a lot of fun to use and explore. Compared to desktops nowadays, it is obscenely limited, but back in the 80s/90s it felt quite the opposite. MS-DOS is a 16-bit operating system and native access to memory beyond 640 kilobytes was the stuff of fantasy. Luckily, that limitation was relatively short lived, and you could access heaps of memory (get it?) by leveraging DOS extenders, such as DOS/4G, DOS/32, or CWSDPMI. Provided they packed the right set of low-level features, these run-time utilities were all enabled by your friendly 386 CPU, and they allowed the adventurous programmer to enter protected mode and access much more memory. It is worth mentioning that 286 CPUs did support protected mode, but they lacked important features, so it was never heavily adopted.
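For anyone who never had the pleasure, the 640 kilobyte ceiling falls out of how real-mode addresses are formed. The sketch below is a simplified model rather than anything from a real toolchain: a 16-bit segment is shifted left by four bits and added to a 16-bit offset, giving a 20-bit address. The helper names are my own, and the wrap to 20 bits assumes 8086-style behaviour with no access to the memory just above one megabyte.

```python
CONVENTIONAL_LIMIT = 0xA0000  # first address above the 640 KB conventional area


def linear_address(segment, offset):
    """Real-mode address: segment * 16 + offset, wrapped to 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


def is_conventional(segment, offset):
    """True when the address lies inside the first 640 KB."""
    return linear_address(segment, offset) < CONVENTIONAL_LIMIT


print(CONVENTIONAL_LIMIT == 640 * 1024)      # True
print(hex(linear_address(0xB800, 0x0000)))   # 0xb8000
print(is_conventional(0x9FFF, 0x000F))       # True
print(is_conventional(0xA000, 0x0000))       # False
```

Everything from 0xA0000 up to the one megabyte mark was set aside for video memory and ROMs, which is why a program could not simply ask DOS for more.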

Once you jumped the hurdle of available memory, there were other lions that would-be programmers needed to tame. They also had to cope with performance differences between CPUs (with or without co-processors), sound hardware, hard-drive storage (or lack thereof), video hardware, and input device support. Phew. As a result of all this variability, many MS-DOS compatible games were faced with cross-platform challenges on the same, err, platform.

Unfairly, this wasn’t an issue when faced with game console development. The company promoting a console could always choose to add new hardware, such as a steering wheel for racing games or new ways of visualizing content, or even additional performance, but it couldn’t do so in a way that would break existing applications. Note that this isn’t necessarily true for consoles built in the last 20 years. For these machines, the hardware remains compatible, but the software involved could change a lot, which would require game developers to retool their application from time to time. That train of thought is inconvenient right now, so we won’t talk about that messy bit of reality.

During the 8- and 16-bit era, game consoles provided a stable environment to build games. This stability led to lower production costs and faster delivery times. In reality, many companies chose to release their games on several different platforms, which muddies the delivery waters a bit. We will sweep these software development complexities under the rug as well since the porting strategies used did not impact their code-base in the same way as cross-platform development would affect their projects today. For example, many companies had distinct implementations for each platform, and code reuse was the exception rather than the rule.

Ironically, given the eccentric nature of MS-DOS in its heyday, which led to all sorts of programming headaches, it’s surprising that using a current incarnation of MS-DOS today produces such a stable environment. All you need is the right level of abstraction. Sure, you could always choose to support MS-DOS and all of its gory hardware configurations, or you could target one configuration and enjoy all of that sweet compatibility. What is this magical solution? Well, this paradise of splendour can be had by leveraging emulators like DOSBox. Yes, the solution to the fragmented environment that is an MS-DOS gaming machine is the venerable DOSBox. It is an excellent way to release your game on dozens of platforms without writing a lick of extra code. Want to run your game on Linux? No problem. What about Mac? No problem. Any console with an open development environment and suitable performance characteristics can (and likely does) support DOSBox, or one of the excellent downstream projects like DOSBox-X. This means your game can run on all of those supported platforms too. Go on, grab some MS-DOS development tools and get coding!

Categories: DOS, Programming, Retro


DOS Software Development Environment

December 12, 2014

I love writing software for Microsoft’s DOS. I didn’t cut my teeth programming on this platform; that was done on an Atari 800 XL machine. However, it was on this platform that I was first exposed to languages like C and Assembly Language, which sparked my torrid love affair with programming that lasts to this day. The focus of this post is DOS software development and remote debugging.

If you have done any development for iOS or Android, then you have already been using remote debugging — unless you are some kind of masochist who still clings to device logging even when it is not necessary. The basic concept is that a programmer can walk through the execution of a program on one machine via the debugger client, and trace the execution of that program through a debug server running on another machine.

The really cool part of this technology is that it’s available for all sorts of platforms, including DOS! Using the right tool chain, we can initiate a remote debugging session from one platform (Windows XP in this case), and debug our program on another machine which is running DOS! The client program can even have a relatively competent UI. For this project, the toolset we are going to use is available through the OpenWatcom v1.9 project, and the tools found inside that wonderful package will allow us to write 16-bit or 32-bit DOS applications and debug them on an actual DOS hardware target! In addition, we can apply similar techniques but this time our server can be hosted within a customized DOSBox emulator, which is also really cool since it allows you to debug your code more easily on the road.

The first scenario is the one I prefer, since it is the faster of the two approaches, but before we get into the details of how to set this up, let’s consider some of the broader requirements.

You’ll need two machines for scenario number one. The DOS machine will need to be a network enabled machine, meaning it should have a network interface card and a working packet driver. I would recommend testing your driver out with tools like SSH for DOS, or the PC/TCP networking software originally sold by FTP Software. In order to use the OpenWatcom IDE, you’ll need a Windows machine. I use VirtualBox and a Windows XP Professional installation; my host machine is a MacBook Pro running Mac OS X 10.7.5 with 4 GB of RAM.

The second scenario involves using the same virtual machine configuration, but running the DOSBox emulator within that environment. You will need to use this version of the DOSBox emulator, which has built-in network card emulation. They chose to emulate an NE2000 compatible card for maximum compatibility, and also because the original author of the patch was technically familiar with it. After installation, you’ll need to associate a real network card with the emulated one, and then load up the right packet driver (it comes bundled with the archive).

For reference, the network interface card and the associated packet driver I am using on the DOS machines are listed below:

- D-Link DFE-538TX

These are the steps I have used to initiate a remote debugging session on the DOS machine:

- Using Microsoft’s LAN Manager, I obtain an IP address. For network resolution speed and simplicity, I have configured my router to assign a static IP address using the MAC address of my network card. Below are the config.sys and autoexec.bat configurations for my network:

AUTOEXEC.BAT

@REM ==== LANMAN 2.2a == DO NOT MODIFY BETWEEN THESE LINES == LANMAN 2.2a ====

SET PATH=C:\LANMAN.DOS\NETPROG;%PATH%

C:\LANMAN.DOS\DRIVERS\PROTOCOL\TCPIP\UMB.COM

rem - By Windows 98 Network - NET START WORKSTATION

LOAD TCPIP

rem - By Windows 98 Network - NET LOGON michael *

@REM ==== LANMAN 2.2a == DO NOT MODIFY BETWEEN THESE LINES == LANMAN 2.2a ====

CONFIG.SYS

DEVICEHIGH=C:\LANMAN.DOS\DRIVERS\PROTMAN\PROTMAN.DOS /i:C:\LANMAN.DOS

DEVICEHIGH=C:\LANMAN.DOS\DRIVERS\ETHERNET\DLKRTS\DLKRTS.DOS

DEVICEHIGH=C:\LANMAN.DOS\DRIVERS\PROTOCOL\TCPIP\NEMM.DOS

DEVICEHIGH=C:\LANMAN.DOS\DRIVERS\PROTOCOL\TCPIP\TCPDRV.DOS

- Load the D-Link Packet driver

- I load a TSR program, which I have built from a Turbo Assembly module, which can kill the active DOS process. I do this because the TCP server provided with OpenWatcom v1.9 does not exit cleanly all of the time, and will often lock up your machine. In the end, your packet driver may not be able to recover anyway, and you will need to reboot the machine, unless you can find a way to unload it and reinitialize. Incidentally, the packet driver does have a means to unload it, but when I attempt to do so after the process has been killed, it reports that it cannot be unloaded. The irony of the situation will make you laugh too, I am sure.

- Navigate to my OpenWatcom project directory, then I start the TCP server which uses the packet driver and your active IP address to start the service. The service will wait for a client connection; in my case, the client is initiated from my Windows XP virtual machine using the OpenWatcom Windows IDE.

- Ensure that the values for “sockdelay” and “datatimeout” are both “9999”, and make sure the “inactive” value is “0” in your WATTCP.CFG file. Even though the documentation says that a value of “0” for the “datatimeout” field is essentially no timeout, I did not find that to be the case. The symptom of the timeout can be observed when you launch the debug session from the OpenWatcom IDE and you see the message “Session started” on your DOS machine, but then the IDE reports a message that the debug session terminated.
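For the last step, the relevant part of WATTCP.CFG would look something like the fragment below. Treat it as a sketch: the three keys and their values are the ones described above, the `key = value` layout is the usual WATTCP convention as far as I know, and the rest of your file (IP address, netmask, gateway and so on) is left out.

```
sockdelay = 9999
datatimeout = 9999
inactive = 0
```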

These are the steps for the DOSBox emulator running within the Windows XP guest installation:

- Install the special network enabled build of DOSBox mentioned above;

- Fire up the NE2000 packet driver (c:\NE2000 -p 0x60);

- Start the TCP service.

- Note that I configured a static IP address on my router using the Ethernet address reported by the packet driver. You should not be able to ping that address successfully until the TCP server is running in DOSBox. While the process worked, I found the time it took for the session to be established and the delay between debug commands to be monstrously slow (45-90 seconds to establish the connection, for example), which made this solution unusable.

While working on a project, it can be really useful to create the assets on a modern machine and then automatically deploy them to the DOS machine without needing to perform a lot of extra steps. It can also be useful to have the freedom to edit or tweak the data on the DOS machine without needing to manually synchronize it. The solution which came immediately to my mind was a Windows network share. This is possible in DOS via the Microsoft LAN Manager software product and has been discussed in a previous post.

Categories: DOS, Game Development, Programming, Retro


Building Wolfenstein 3D Source Code

June 16, 2014

Way back on Feb 6, 2012, id Software released the source code to Wolfenstein 3D, 20 years after it had been written. The source code release does not come with any support or assets from the originally released game. In fact, id Software is still selling this title on various Internet stores like Steam. I played around with a DOS port of the DOOM source code quite some time ago, but I had never bothered to try and build its ancestral project. Until now!

As it turns out, it’s actually quite straightforward with only a minor hiccup here and there. The first thing you’ll need is a compiler, that almighty piece of software that transforms your poorly written slop into a form that the operating system can feed to the machine. For this project, the authors settled on Borland C++ v3.0, but the project is 100% compatible with v3.1. I don’t know whether more recent compilers from Borland are compatible with the project files, or whether the code in the project produces viable targets with them, so good luck if you decide to make your own roads.

As per the details in the README file, there are a couple of object files you will want to make sure don’t get deleted when you perform a clean within the IDE:

- GAMEPAL.OBJ

- SIGNON.OBJ

You can open up the pre-built project file in the Borland IDE, and after tweaking the locations for the above two files, you should be able to build without any errors. The resulting executable can then be copied into a working test directory where all of the originally released assets are located; I believe my assets were from the 1.2 release.

There are also a few resource files you must have in order for the compiled executable to find all of the right resources. According to legend, the various asset files were pulled from a sprinkling of source formats and assembled into “WL6” resource files. A utility called I-Grab, which is available via the TED5 editor utility, produced header files (.H) and assembler based (.EQU) files from that resource content which allowed the game to refer to them by constant indices once the monolithic WL6 resource files were built. There are annotations in the definition files, using the “.EQU or .H” extension, with a generated comment at the top which confirms part of that legend.

The tricky part in getting the game to run properly revolves around which resource files are being used by the current code base. The code refers to specific WL6 resource files, but locating those resource files using public releases of the game can be very tricky because those generated files have changed an unknown number of times. Luckily, someone has already gone through the trouble of making sure the graphics match up with the indices in the generated files. The files have conveniently been assembled and made available here:

After unpacking, you’ll need to copy those to the test directory holding the registered content for the game. Note that without the right resource files, the game will not look right and will suffer from a variety of visual ailments, such as B.J. Blazkowicz’s head being used as a cursor in the main menu, or failing to see any content when a level is loaded.

Categories: DOS, Game Development, Games, Programming, Retro


Space Invaders in SVG!

May 29, 2014

I wrote a little space invaders game in SVG (Scalable Vector Graphics) several years ago. It plays sound effects and has a few of the essential features within the game. I have recently modified it to work on Google Chrome browsers, since it was originally built to run within Adobe’s SVG Viewer plug-in which could only run on Windows 98 or XP platforms.

It’s a great game for people who are new to programming to get their feet wet and hack around with. To modify the game to any great extent, you would need a rudimentary knowledge of JavaScript, SVG, and DOM level programming. You can run the game in the browser directly; there is no need to embed it within an HTML page, although that is certainly possible. You can also download it here. You can move the player using the ‘A’ and ‘D’ keys, and fire with the ‘S’ key. The space bar pauses and unpauses the game.

Here is a little excerpt taken from the Space Invaders Atari 2600 manual:

Welcome to Space Invaders! Before you can begin playing, the first step is to place your cursor over the docking rectangle in the upper-left hand corner. Once the cursor has been positioned over the rectangle, click it and it should change color. Lift your hand from the mouse and you’re ready to play!

You are a recent enlistee in the Earth Defense Corps. For the past six weeks you’ve undergone grueling and intensive training. Now you stand at attention, nervously anticipating the most critical section of your training…

“Okay, kid, you’re on!” barks your commanding officer.

Quickly you climb into a laser tank. A second enlistee follows you. You each settle into deep, leather seats. With a soft whirring sound, the automatic hatch cover closes overhead. As your eyes adjust to the dim light of the laser capsule, you begin to make out the controls. Mentally, you check off each knob, dial, button, and display. For the next several hours you and the other enlistee will operate these controls to defend your planet in an attack simulation. The screen in front of you lights up. A column of bomb-dropping aliens advances toward you. What next? For a second your mind goes blank. Have you learned your lessons well? No time to refer to the manual now. Your commanding officers are watching and it’s your show.

Your tasks are to stop the invaders from landing on your territory; avoid enemy bombs; and score as many points as possible. The simulation ends when you lose all your lives or when any invader lands on your planet. If you destroy all 36 space invaders before they touch your planet, a new set of invaders will appear on the screen. Each new set of invaders will move a little faster than the previous set.

You begin each game with three shields. Initially, you are safe behind the shields. But as you and the enemy hit the shields with lasers and bombs, they become damaged and eventually disappear altogether. As the space invaders come close to the shields in their descent toward you, the shields will be destroyed and your only hope is to destroy the remaining invaders before it’s too late…

Categories: Games, Programming, Retro


Borland Turbo C++ v3.0

February 13, 2013

In many ways I found my departure from C to C++ to be less than stellar. Sure, it brought to the table new paradigms and new capabilities, all of which were bright and shiny to new and experienced programmers alike, but hidden away in a pocket covered in lint was an even greater number of difficulties, obscure errors, and buggy or non-standard compilers.

Despite these problems, C++ still managed to shine and eventually the features began to rub off on me. Without a doubt, the three most important features of the language were encapsulation, inheritance, and polymorphism. Using these new capabilities, programmers everywhere found new ways to leak memory, produce bugs, and bloat their code; moreover, and somewhat less sarcastically, they also found new design patterns, complex adaptive software architectures, and spiffy new data structures that just made everything taste better. Where would the software world be without indecipherable meta-programming techniques and obscure job interview questions? Sorry, more sarcasm coming through.

Turbo C++ was a fast 16-bit compiler from Borland and was essentially a cheaper and less functional version of Borland C++. Compared against other inexpensive tools of the time, it had many of the same capabilities as Microsoft’s QuickC compiler and provided a few new ones too. Most importantly, it could compile C++ and C source code while QuickC could only handle the latter. Like QuickC, it had a built-in debugger, but Turbo C++ was more feature rich than Microsoft’s incarnation. To be fair, Microsoft had a C++ compiler too, and it would not be a stretch to say it was one of the most popular compilers in the industry; however, it was also not the cheapest compiler to be had, and the Microsoft version didn’t support a lot of the C++ standard until recently, although exactly which standard and to what degree is a hot topic which I won’t dive into here. Borland provided an implementation of the AT&T C++ 2.1 standard with their product.

I remember the Turbo C++ compiler having more support for templates than most of the competition at the time. According to Wikipedia, the Borland product line was instrumental in the development of the Standard Template Library. I was wary of templates when I first encountered them back in 1992. The problem was mostly due to documentation and compatibility. Many C++ books never even touched upon templates since many of the major compilers, including Microsoft’s, either didn’t support them or supported them so poorly as to render them unusable. Professional programmers probably weren’t pushing for the technology either since it was so haphazard. Eventually this all led to poor interoperability between compilers even on the same operating system.

One major limitation with Borland’s product at the time was the inability to produce 32-bit executables. This feature was necessary if your program needed to use 32-bit protected mode for access to extended or expanded memory (there was a 286 16-bit protected mode available in Turbo C++ but it didn’t interest me). Because of this unfortunate limitation, I didn’t use the program for as long as I probably would have, and opted instead for DJGPP, the famous port of the GCC compiler from DJ Delorie.

The Borland C++ line of products is now distributed by Embarcadero Technologies, which acquired all of Borland’s compiler tools with the purchase of its CodeGear division in 2008.

Categories: DOS, Programming


Microsoft Quick C Compiler

December 21, 2010

When I first came in contact with this compiler, I was just starting high school and eager for the challenges ahead (except for the material which didn’t interest me, basically non-science courses). When I went to pick the courses for the year, I noticed a couple which taught computer programming. The first course, which was a pre-requisite for the second, taught BASIC while the second course taught C programming. At this point in my life, I was an old hand at BASIC, so I breezed through the first course. The second course intrigued me much more. I was familiar with C programming from my relatively brief experience with the Amiga, but I had a lot left to learn. My high school didn’t use the Lattice C compiler, but a Microsoft C compiler instead. I located the gentleman who taught the course and he pointed me to a book called Microsoft C Programming for the PC written by Robert Lafore and the Microsoft QuickC Compiler software. I had a job delivering newspapers at the time, so I could just barely afford the book using salary and tips saved from two weeks doing hard time ($50 at the time), but the compiler was just too expensive. So I did what any highly effective teenager would do: I dropped really big hints around the house (including the location and price of the compiler package I wanted) until my parents purchased a copy for me on my birthday.


There are a number of differences between the BASIC and C programming languages. One of the more obscure differences lies in how the C programming language deals with special variables that can hold memory addresses. These variables are called pointers and are an integral part of the syntax and functionality of the language. BASIC did have a few special functions which could accept and address locations in memory – I’m thinking of the CALL and USR functions specifically, although there were others. However, a variable holding an address was the same as one holding any other number since BASIC lacked the concept of strong types. The grammar of the C language is also much more complex than BASIC; it had special characters and symbols to express program scope and perform unary operations, which introduced me to the concept of coding style. When a programmer first learns a particular style of coding, it can turn into a religion, but I hadn’t really been exposed to the language long enough to form an opinion. That would come later, and then be summarily discarded once I had more experience.

There were libraries of all sorts which provided functionality for working with strings, math functions, standard input and output, file functions, and so on. At the time, I thought C’s handling of strings (character data) was incredibly obtuse. Basically, I thought the need to manage memory was a complete nuisance. BASIC never required me to free strings after I had declared them, it just took care of it for me under the hood. Despite the coddling I received, I was familiar with the concept of array allocations since even BASIC had the DIM command which dimensioned array containers; re-allocation was also somewhat familiar because of REDIM. However, there were many more functions and parameters in C related to memory management, and I just thought the whole bloody thing was a real mess. The differences between heap and stack memory confused me for a while.

There were many features of the language and compiler I did enjoy, of course. Smaller and snappier programs were a huge improvement over the somewhat sluggish software produced by the QuickBASIC compiler and the BASIC interpreter. The compiled C programs didn’t have dependencies on any run-time libraries either, even though there was probably a way to statically link the QuickBASIC modules together. Pointers were powerful and were loads of fun to use in your programs, especially once I learned the addresses for video memory, which introduced me to concepts like double buffering when I began learning about animation. Writing directly to video memory sounds pretty trivial to me right now, but it was so intoxicating at the time. I was more involved in game programming by then and these techniques allowed me to expand into areas I never considered. It allowed for flicker-free animation, lightning fast ASCII/ANSI window renderings via my custom text windowing library, and special off-screen manipulations that allowed me to easily zip buffers around on the screen. A number of interesting text rendering concepts came from a book entitled Teach Yourself Advanced C in 21 Days by Bradley L. Jones, which is still worth reading to this day.
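The double buffering idea is easy to show without a DOS machine. The sketch below is a toy model in Python rather than the C I wrote back then: a bytearray stands in for an 80x25 text screen where each cell is a character byte followed by an attribute byte, drawing happens in an off-screen buffer, and the finished frame is copied across in one go. All of the names are my own, and real code would write through a far pointer to the video segment instead.

```python
COLS, ROWS = 80, 25
CELL_BYTES = 2  # character byte followed by attribute (colour) byte


def cell_offset(row, col):
    """Byte offset of a text cell inside an 80x25 text buffer."""
    return (row * COLS + col) * CELL_BYTES


def put_text(buffer, row, col, text, attr=0x07):
    """Write text into a buffer, one character/attribute pair per cell."""
    for i, ch in enumerate(text):
        off = cell_offset(row, col + i)
        buffer[off] = ord(ch) & 0xFF
        buffer[off + 1] = attr


def flip(screen, back):
    """Copy the finished off-screen frame to the visible screen in one step."""
    screen[:] = back


screen = bytearray(COLS * ROWS * CELL_BYTES)  # stands in for video memory
back = bytearray(len(screen))                 # off-screen work area

put_text(back, 0, 0, "Hi")   # draw off-screen; the screen is untouched
flip(screen, back)           # present the whole frame at once
print(chr(screen[0]), chr(screen[2]), screen[1])
```

Because nothing touches the visible buffer until the frame is complete, the viewer never sees a half-drawn window, which is where the flicker-free animation came from.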

At around this time, I also started to learn about serial and network communications. The latter didn’t happen until my last year at high school. Basically, I wanted to learn how to get my computers to talk to one another. It all started when I became enchanted by the id Software game called DOOM, which allowed you to network a few machines together and play against each other in a vicious winner takes all death-match style combat. Incidentally, games like Doom, Wolfenstein 3D, or Blake Stone: Aliens of Gold led me down another long-winding path: 3D graphics, but that didn’t happen until a few months later. Again, the book store came to the rescue by providing me with a book entitled C Programmer’s Guide to Serial Communications by Joe Campbell. I was somewhat familiar with programming simple software which could use a MODEM for communication, since BASIC supported this functionality through the OPEN function, but I knew very little about the specifics. Once I dug into the first few chapters, I knew that was all going to change.

Categories: DOS, Programming, Reflections, Software
