Archive for the 'Reflections' category

Retro Computing and Space

April 21, 2023

Space is always one of the most valuable assets in your home. Sufficient space allows you to build and manage large projects, showcase large collections, and enjoy a host of entertainment options. Anyone who has a home, be it an apartment, a small house, or a large suburban palace, understands how quickly that space can fill up. I am always fighting this problem. I periodically need to optimize my project space by selling, reorganizing, and discarding household items. My wife and I have a long-standing agreement that most of our house is off limits for my own projects. That doesn’t mean we can’t use that space for projects we both want to build, but it generally means my retro computing hardware, maker equipment, and other toys are confined to the basement. This suits me fine most of the time, since our house is fairly spacious. In some cases, however, I need to think outside the box if I want to set up some equipment or showcase a collection when space is at a premium.

Enter my fledgling Amiga hobby. Despite owning an Amiga 1000 computer for several years, I have had little opportunity to use it. I have used emulation in place of the real thing, but in the last six months I have really had an itch to use the real hardware. As is the case for many older computers, the complete machine takes up a lot of space, and that space is currently being used by other machines. Even though I have painstakingly eliminated several pieces of equipment over the years, through attrition or acts of God (freak electrical storms), I am still fighting for each additional foot of space I manage to free up. You can see lots of YouTubers, whether single men, married men with no kids, or simply folks with lots of free space, with numerous machines set up, fully kitted out, and ready to go at a moment’s notice. I am not in that situation. As a result, I decided to take a different tack with my Amiga hardware. Since I now own an Amiga 1000, an Amiga 500, and an Amiga FPGA device called a Minimig, I knew it was time to find a solution…

Categories: Reflections

Playing Deadlight on the PC

April 1, 2020

I have been enjoying Deadlight on the PC. It’s a beautiful little action platformer with relatively simple mechanics, albeit with a frustrating mechanism when shooting and loading a pistol. It’s simple to shoot, but difficult to get the aim right. Luckily, most targets are large and relatively easy to hit. I hope there isn’t an upcoming situation where I need to shoot something in a hurry…

While reading some comments about the game the other day, I noticed that a lot of people had difficulty with the helicopter scene. Judging by the comments, they found the difficulty spike came out of the blue, to the point where the shock of it caused many of them to quit the game cold turkey. They just abandoned it, claiming the scenario was unreasonably challenging. The thing is, I found it tricky too, but only for a short while. I needed several attempts before I finished it. The point is that I did get through it, even after I screwed up one section royally and thought for sure I was a goner. Just to prove it wasn’t a fluke, I tried it again and completed it with no issues.

The comments in the thread seem a little too caustic, given the game’s average difficulty level. I am left wondering about the psychology at play here. If a game has established an easy to moderate level of difficulty, and then bumps the difficulty up a few notches out of the blue, what does that do to the player’s opinion of the game? Are players statistically more likely to drop the game at this point? Do the majority push through these hiccups? Are we players nowadays just a tad spoiled in our expectations around a game’s difficulty, once our notions about a title have been established?

Categories: PC, Reflections, Steam

Kill Two Cacodemons, Once a Day

January 20, 2019

I am ashamed to admit that I don’t play every game I own. Some of them haven’t even been fired up a single time on the machine for which they were destined to be played. Why is that?

Well, the easy answer is that I buy too many games for my current lifestyle, and that is definitely true. I just don’t have as much free time as I had before I had kids. However, that answer doesn’t sit quite so well with me. It doesn’t feel like enough of a reason. I know that if I dedicated all of my free time to the effort, I could play most, if not all, of those titles. It would take time, but I could slog through them. So then, why don’t I just do that?

The more complex answer is that I find many types of games I own to be mentally exhausting. I do tend to gravitate to the ones involving a lot of action and little else because of that. I just can’t bring myself to face the onslaught of constant decision making most of the time. I do play those other types of games, and I will enjoy them to varying degrees, but I find the exercise of unwinding a little harder than when playing a game like Doom. The fact that I play these games at all is ironic, because I often partake in electronic games to unwind.

With games like Doom, it comes down to a constant cycle of challenge, failure, and success. I have a job and home life where I am challenged with all sorts of problems on a daily basis. That reality is stressful, and I often feel like I make very little progress day to day. When I play a game like Doom, it is challenging and I do fail often, but I also succeed many times. I don’t get that kind of tight cycle in a game like Divine Divinity; there, the cycle is much slower. With Doom, or perhaps a good platformer, those short micro-wins are fulfilling. Sure, the mental onslaught of fear, anger, and worry while playing these sorts of titles is tiring eventually, but by the end I have usually made significant progress, and that progression is enjoyable and, ultimately, worth the price of admission.

Categories: Games, Reflections

8-bit Christmas

December 27, 2014

I recently finished the book “8-bit Christmas” by Kevin Jakubowski. It was a very enjoyable read, with many similarities to my own childhood along with many differences; it was fun to read another person’s perspective on that period. My brothers and I all wanted a Nintendo after we played one at a relative’s house during the Christmas break. The trouble, of course, was that we would have to wait until next Christmas to get one. Luckily, I had a birthday in July!

Categories: Reflections

Are we overemphasizing the importance of old games?

July 28, 2013

I visit the site 1up.com fairly regularly. I enjoy their articles much more than those on other sites, and I find they have a good perspective on retro-gaming content as well. Sometime last year, they wrote up a Top 100 Games of All Time list, which is nothing new and seems to have been done to death in the game journalism industry. Their angle was a little different, though, since they wrote a full retrospective review of each game on the list, rather than just a short synopsis of why the game was important. One of the games reviewed was, of course, Wolfenstein 3D. In the article, they explain that Wolfenstein 3D created all the basic elements of any FPS game made today, such as items being positioned in front of the player character, armour, tiered weapon systems, and enemies which require different combat strategies to finish them off. I personally love this game and some of its knock-offs too, like Blake Stone: Aliens of Gold; I think a number of the core mechanics present in FPS games were popularized by that game. In the article, however, they imply that FPS games made today owe much to Wolf 3D and that it defined basically everything an FPS could be:

“id Software set these standards back in 1992, and since then, very few FPSes have veered from these fundamental ideas.”

This assertion, I think, overemphasizes its importance. Yes, they do have some of the basic elements in common, much like every good cookie has flour and sugar as ingredients, and credit should be given to those who broke into the market first, as well as to the inventiveness of the individuals at the time. However, saying that today’s FPSes have veered very little since then is like saying that action movies still have action in them, and so are really just boring rip-offs of what is often called the first action film, The Great Train Robbery, made in 1903. Once a genre is created, its products will, by definition, share situations and themes with one another. But to say that very little has been introduced to expand the FPS genre since then is to ignore everything that makes these games different.

Since I am a programmer by trade, and one who specializes in game science and computer graphics, I know how technically different games made today are from those made in 1992. For technical reasons alone, they should rarely (if ever) be compared to earlier titles, because doing so diminishes the achievement of today’s games. There is so much more technical effort, detail, and expense in making a game like Call of Duty than Wolfenstein 3D, even when you account for the shift in technology. The latter was created by fewer than five people and, according to Wikipedia:

“The game’s development began in late 1991… and was released for DOS in May, 1992.”, source.

There’s not a lot of sophistication that can be put into a game in six months; the folks at id Software were aiming to fill a void in the marketplace, much like Apple did with the iPod. They depended heavily on being first to market. Sure, a certain amount of inventiveness was needed for both products to succeed, and having a Nazi theme surely helped to popularize the title, but the bar tends to be much lower when you are the first to arrive in a market. For these kinds of products, timing is just as important as any other attribute. You don’t need to be the best; in fact, many markets are full of examples of better products, released only a couple of months later, that did not survive simply because they were not first to fill the void.

By contrast, games like Call of Duty or Mass Effect take years of development by teams of 50 to 150 people, and typically have features like:

- Complex stories and nuances of game play;

- Interesting characters and custom look and feel to main protagonists;

- Large variety of multi-faceted equipment and combat scenarios;

- Full orchestral music and top of the line sound effects;

- Physics-based and realistically interactive environments;

- Sophisticated level of detail within very large environments;

- AI driven characters which can act cooperatively or competitively in a variety of environments;

- Player-created, user-driven, or influenceable story arcs;

- Dynamic level structures which can be destroyed and collapsed at will;

- Multiplayer scenarios with large groups of people working toward team-based objectives;

- Facial animation and expression techniques which map to voice samples.

The list can go on from there, but you get the idea. Comparing one of these games to Wolfenstein 3D is like comparing a bicycle to something James Bond would drive and saying they are basically the same because they are both vehicles…

Categories: Reflections

Treasure your Console

June 26, 2013

There are some people who are collectors, who love to hoard and collect things of all types, and others who get rid of things as soon as they stop using them. There are still others who shoot them on sight when playing Mass Effect 2. Unfortunately for video game consoles, they tend to be traded, given away, or discarded more often than not as soon as the next big thing comes along. The fact is that for many of us, it is simply not practical to hold onto every piece of hardware that crosses our path, even though we may want to.

I enjoy playing games on the console hardware, but I also enjoy playing those same games through an emulator, because some of the console’s quirks can irritate me after a while. I tend to be a pragmatist when it comes to gaming nostalgia; generally speaking, if I don’t use or enjoy the console or the games available for it, then I will get rid of it. My reasons for doing this almost always centre on clutter; I relish my hobby more if I can find and use the games I enjoy more easily. I almost never throw these systems away, although a friend of mine will attest to an unfortunate incident involving a fully functional Atari ST computer and monitor going into the trash in a moment of weakness; thankfully, these incidents are very rare.

These bits of retro hardware are full of little issues for the modern gamer. They don’t work very well on new televisions, they sometimes emit a musty smell, their hardware eventually deteriorates to the point where certain components need to be replaced, they lack save-state functionality, and they often require bulky things like cartridges in order to do something useful. Some of these problems can be addressed: the console hardware can be modified to support better video outputs such as composite (RCA), component, or VGA; failing components can be replaced with higher-quality modern electronics; new cartridges that use flash or SD card storage are often available; and, of course, the hardware can be cleaned.

However, I am of the opinion that certain pieces of gaming hardware and software are more than just the sum of their parts and are worth hanging onto because they represent something greater, even if they don’t work that well out of the storage box. We are part of a very large body of people in the world who grew up with iconic video game characters like Mario and Zelda in their daily lives. Many other elements of video game culture, such as the people involved, the companies behind the platforms, and the art, music, and sound effects, have permeated our society over the last five decades. Many of these systems have long and varied histories, most of which are very interesting from a cultural and technological standpoint. They are part of the reason you are using a smart phone right now, and part of the reason your television has a web browser. If you want something fun and interesting to read, there are numerous books on video game history and technology, available through various online retailers or perhaps directly through the author’s own web site. For those systems you found fun to play, try hanging onto them for a while; your kids may enjoy using them, and you may certainly enjoy regaling them with interesting historical tidbits as they develop an appreciation for the devices they use.

Categories: Games, Reflections

Launch birthdays for the *Sega 32X and **Nintendo DS!

November 21, 2011

* North American launch date in 1994 for the 32X

** North American launch date in 2004 for the Nintendo DS

The Sega 32X was designed to breathe new life into the aging Mega Drive/Genesis video game console, which was being ripped apart by Nintendo’s juggernaut, the SNES. Sadly, it didn’t do so well and quickly evaporated from store shelves. Despite the poor reception, it still has a birthday, so make sure you play your favorite title tonight. It shouldn’t be too hard to pick one, given the limited selection of good titles for the platform. It’s probably for the best that we don’t mention the Sega CD either… well, despite my obvious misgivings about the system, I know it is near and dear to more than a few people. So, Sega fans, on this happy day, I salute you!

The Nintendo DS needs no introduction, since many of you have an incarnation of that device sitting in your house right now. With over 149 million units sold worldwide, I think it could be considered a success. Let’s do a little math, shall we?

(149,000,000 × $150) + (149,000,000 × 6 × $40) = $58,110,000,000

That’s an average price of $150 per unit, with each unit accounting for an average of 6 games which sell for an average of $40 each. Let’s assume that 70% of that amount went to pay for everything from manufacturing, to licensing, to marketing and employee salaries. That still leaves roughly $17.4 billion in the clear.
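The estimate is easy to rerun with different assumptions. Here is a minimal Python sketch that reproduces the arithmetic; every input (the unit count, the $150 hardware price, six games per unit at $40 each, and 70% costs) is one of the post’s own rough assumptions rather than real sales data, and the function names are illustrative only. Using integer math, the remaining 30% of $58.11 billion works out to $17.433 billion.

```python
# A back-of-the-envelope Nintendo DS revenue estimate.
# Every input below is an assumption taken from the post, not real sales data.

UNITS_SOLD = 149_000_000   # hardware units sold worldwide
HARDWARE_PRICE = 150       # assumed average price per unit, in dollars
GAMES_PER_UNIT = 6         # assumed average number of games per unit
GAME_PRICE = 40            # assumed average price per game, in dollars
COST_PERCENT = 70          # assumed share spent on manufacturing, licensing, etc.


def gross_revenue(units, hw_price, games_per_unit, game_price):
    """Hardware revenue plus software revenue, in whole dollars."""
    hardware = units * hw_price
    software = units * games_per_unit * game_price
    return hardware + software


def remaining_after_costs(gross, cost_percent):
    """Amount left after removing `cost_percent` percent of `gross` (integer math)."""
    return gross * (100 - cost_percent) // 100


gross = gross_revenue(UNITS_SOLD, HARDWARE_PRICE, GAMES_PER_UNIT, GAME_PRICE)
net = remaining_after_costs(gross, COST_PERCENT)
print(f"Gross revenue: ${gross:,}")   # Gross revenue: $58,110,000,000
print(f"After costs:   ${net:,}")     # After costs:   $17,433,000,000
```

Changing any one constant, for example dropping `GAMES_PER_UNIT` to 4, shows how sensitive the final figure is to the software attach rate.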

So Nintendo Corporation, while you sit upon your mountain of money contemplating this special day, we the bottom dwellers of society raise our filthy hands in a formal salute!

Categories: Nintendo, Reflections, Sega

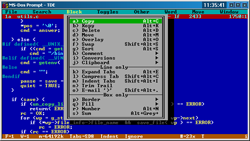

Thomson-Davis Editor (TDE)

November 6, 2011

The Thomson-Davis Editor, or TDE, was the first programmer’s editor to ever grace my hard drive. A programmer’s editor (PE) can be somewhat different from a typical text editor used for typing up README files or other user-level documents. A good PE will usually have a large assortment of features which make the job of editing source code a little easier. Ironically, these editors can be some of the most obtuse software installed on a desktop system. To use them effectively, you must memorize several obscure key combinations and commands. Once committed to memory, these commands can be very powerful, allowing you to perform complex editing or searching operations.

As an aside, the usability goals for an editor within an IDE such as Borland C++ seem to be the exact opposite of the goals set out by the authors of many PEs. Editing within an IDE is almost universally easy, while performing the same tasks within a PE requires practice and a certain degree of research. Approaching this from the viewpoint of a novice looking into the dark world of the seasoned, and perhaps a little cynical, UNIX programmer, this seems a little odd: the interface of an IDE handles so many different tasks (and thus has so many opportunities to botch things up), whereas a PE tends to be targeted at one task, editing source code. Surely, with fewer features, it should be easier to craft something simple to use? It’s almost as if the author of a PE is trying to compensate for a lack of development features by throwing in additional editing complexity. For example, how many standard forms of regular expression syntax does your PE support? If you answered anything other than “as many as I want, since I can simply write a plug-in to support it,” then you’re not using the right editor.

I stumbled across TDE while browsing the download area for a local BBS. It included the source code for the program which was written in C, and it seemed to have a variety of interesting features; it certainly had more technical features than the QuickC editor I was using at the time. I guess I gravitated towards complexity at the time, and I quickly grew to love TDE and began modifying the source code to suit my needs. If I were to use the latest version of TDE today, I’m betting a lot of those hacked-in features would already be implemented.

The code for TDE was a great learning experience for me. It was organized fairly well, so it made for relatively easy modifications and creative hacks. I lost the source code for my modified version during The Great Hard-drive Crash in the mid-1990s. For some odd reason, I didn’t have a single back-up. It was particularly strange, since most of my favourite projects were copied onto a floppy disk at some point. Frustratingly, I think I still have a working copy of the original 3.X code on disk! Anyway, the source code had excellent implementation details like fancy text and syntax handling, decorated windows, and reasonably tidy data structures. I don’t remember using any code from the editor in any future project, but I certainly took away a number of ideas. Doubly-linked lists may not seem like a big deal to me now, but I hadn’t read that many books at this point, so glimpses of real implementations using these data structures were very cool and inspiring. I found the windowing classes and structures particularly interesting, since some of the windows were used for configuration, others for editing, and some for help. This was the first abstraction for a windowing system within an application I had run across. It was beautiful and gave me a lot of ideas for HoundDog, a future project which tracked the contents of recordable media like floppies and CD-ROMs, so that I could find that one file or project quickly without relying on floppy labels.
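An editor’s list of lines is a natural fit for a doubly-linked list. Below is a minimal Python sketch of the idea. It is not TDE’s actual code (TDE is written in C, and its real structures are not reproduced here), and `Line` and `LineBuffer` are hypothetical names. Because every line keeps a reference to both neighbours, inserting or deleting a line only re-points a couple of references instead of shifting every line below it, and the buffer can be walked in either direction, which suits scrolling up as well as down.

```python
class Line:
    """One line of text, linked to the lines before and after it."""

    def __init__(self, text):
        self.text = text
        self.prev = None
        self.next = None


class LineBuffer:
    """A minimal doubly-linked list of lines, as an editor might keep them."""

    def __init__(self):
        self.head = None
        self.tail = None

    def append(self, text):
        """Add a line at the end of the buffer and return its node."""
        node = Line(text)
        if self.tail is None:
            self.head = self.tail = node
        else:
            node.prev = self.tail
            self.tail.next = node
            self.tail = node
        return node

    def insert_after(self, node, text):
        """Insert a new line directly after `node` in constant time."""
        new = Line(text)
        new.prev = node
        new.next = node.next
        if node.next is not None:
            node.next.prev = new
        else:
            self.tail = new
        node.next = new
        return new

    def delete(self, node):
        """Unlink `node` in constant time by re-pointing its neighbours."""
        if node.prev is not None:
            node.prev.next = node.next
        else:
            self.head = node.next
        if node.next is not None:
            node.next.prev = node.prev
        else:
            self.tail = node.prev
        node.prev = node.next = None

    def forward(self):
        """Yield line texts from top to bottom."""
        node = self.head
        while node is not None:
            yield node.text
            node = node.next

    def backward(self):
        """Yield line texts from bottom to top."""
        node = self.tail
        while node is not None:
            yield node.text
            node = node.prev


buf = LineBuffer()
first = buf.append("#include <stdio.h>")
buf.append("}")
buf.insert_after(first, "int main(void) {")
buf.delete(first)
print(list(buf.forward()))   # ['int main(void) {', '}']
print(list(buf.backward()))  # ['}', 'int main(void) {']
```

A real editor would also track the cursor’s current node so that moving up or down a line is a single `prev` or `next` step.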

Categories: PC, Reflections

The QuickBASIC and the 0xDEAD

November 2, 2011

QuickBASIC is very similar to QBasic, since the latter is just a stripped-down version of the former. QuickBASIC had a few features QBasic did not have, such as the ability to compile programs and libraries, support for more than one module, and the ability to create larger programs. It also had an annoying bug where the memory management model in the interpreter was different from the one used by the compiler; this was a problem when your program worked in the debugger, which used the interpreter, but not when the program was actually compiled and run from the shell.

QBasic shipped with MS-DOS 5 and consisted of only an interpreter, meaning it translated small chunks of code into machine code as they were executed (I believe it did cache the translated portions, so it wouldn’t need to translate them again). QuickBASIC was more advanced and had a compiler as well as an interpreter, which allowed it to translate and optimize the machine code it generated before you ran it. It had only one dependency during compilation, and that was the QB.LIB library. Using the QuickBASIC IDE or the command line, you could compile multiple modules into a single target which could be a library or an executable program.

QuickBASIC was handy for prototyping and demos. I didn’t need to do many of these for my own projects, although I did a lot of experimentation with network interrupts before porting those routines and programs to C. I mainly used QuickBASIC for projects and demos at school and eventually college; many of those were also written in C due to a few course requirements.

While in college, I wrote a chat application for DOS that ran over a local area network, before the concept became popular. It used the SPX protocol for some parts and the IPX protocol for others. It only supported peer-to-peer communication, and only with one other person, but it served as a demo of simple network communication, and many of the students used it quite regularly.

In other programs, I used Novell NetWare interrupts for communication, broadcasts, and client machine discovery. I loved it and found coding these applications fun and exciting; my Ralf Brown textbook was well used during this time. I think my interest in programming these network applications stemmed from my days playing DOOM over similar networks. Basically, I just loved the potential for interactivity, which is a tad ironic since I was a fairly quiet programmer back then. A few of my friends became interested in the software I was writing, and one day I decided to play a few practical jokes on my fellow students. I created a custom program for sending messages via the NetWare API. These messages could be broadcast to every machine on the network, a group of machines, or a specific machine. When a machine received one of these messages, it would display a dialog showing the user the message. Pretty simple concept, but many people were not aware their machines could even do that, or what it even meant to be on a network.
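To make the three delivery modes concrete, here is a hypothetical Python sketch of how a program might decide which machines should display a message. It does not use or imitate the real NetWare API; `Target`, `Message`, and `recipients` are invented names, and the group table is assumed to be a simple mapping from a group name to a set of machine names.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    """Hypothetical delivery modes matching the three described above."""
    BROADCAST = "broadcast"  # every machine on the network
    GROUP = "group"          # every machine in a named group
    MACHINE = "machine"      # one specific machine


@dataclass
class Message:
    text: str
    target: Target
    name: str = ""  # group or machine name; ignored for broadcasts


def recipients(message, machines, groups):
    """Return the sorted names of the machines that should display `message`.

    machines: the machine names currently on the network
    groups:   a dict mapping each group name to a set of machine names
    """
    if message.target is Target.BROADCAST:
        return sorted(machines)
    if message.target is Target.GROUP:
        members = groups.get(message.name, set())
        return sorted(m for m in machines if m in members)
    # Target.MACHINE: deliver only if that machine is actually present.
    return [message.name] if message.name in machines else []


machines = ["LAB01", "LAB02", "LAB03"]
groups = {"BACKROW": {"LAB02", "LAB03"}}
print(recipients(Message("Lab closes at 5", Target.BROADCAST), machines, groups))
print(recipients(Message("Lab closes at 5", Target.GROUP, "BACKROW"), machines, groups))
print(recipients(Message("Lab closes at 5", Target.MACHINE, "LAB01"), machines, groups))
```

Keeping the targeting decision separate from the actual sending makes it easy to check who will see a message before anything goes out on the wire.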

Simply using the network software that came with NetWare wasn’t an option, since the message dialogs produced by those tools contained the name or address of the machine the message originated from. According to Steve Wozniak, co-founder of Apple Computer and well-known prankster, the most important element of any gag is not getting caught. We needed to customize the message being sent so that it included only the details we wanted to send, and the only way to do that was to write a custom program. This was fairly easy since I had been writing software on NetWare for several months, and when the packets were sent off through the network, our machine name was carefully omitted and our address forged.

We used the program to send funny or confusing messages to some of the students. No profanity or crude humour, mind you… well, nothing too crude anyway. My goal was always to get the user to believe what the machine was telling them. I convinced one student that her computer didn’t like the way she typed; her keystrokes were always too hard or too fast for the computer’s liking. Several computers were being used by students to view pornography, so I did my best to make them feel uncomfortable in a public setting. Many of them believed they were being watched by the network administrators, which could have been true, although network monitoring software was much more limited at the time. Anyway, these people quickly shuffled out of the room red-faced, hoping not to get caught on their way out. I still get a chuckle out of it even now, when I think about it.

In a way, I am kind of saddened by the complexity of today’s operating systems. Writing the same software on modern machines would be far more difficult, mostly because of modern operating system and network security features. Networks just aren’t managed the same way anymore, so a programmer’s ability to exploit a network so directly has disappeared. I don’t particularly disapprove; it’s just not as easy to have a little fun.

Categories: PC, Reflections

Microsoft Quick C Compiler

December 21, 2010

When I first came in contact with this compiler, I was just starting high school and eager for the challenges ahead (except for the material which didn’t interest me, basically the non-science courses). When I went to pick my courses for the year, I noticed a couple which taught computer programming. The first course, a prerequisite for the second, taught BASIC, while the second taught C programming. At this point in my life, I was an old hand at BASIC, so I basically breezed through the first course. The second course intrigued me much more. I was familiar with C programming from my relatively brief experience with the Amiga, but I had a lot left to learn. My high school didn’t use the Lattice C compiler, but a Microsoft C compiler instead. I located the gentleman who taught the course, and he pointed me to a book called Microsoft C Programming for the PC, written by Robert Lafore, and to the Microsoft QuickC Compiler software. I had a job delivering newspapers at the time, so I could just barely afford the book ($50 at the time) using salary and tips saved from two weeks doing hard time, but the compiler was just too expensive. So I did what any highly effective teenager would do: I dropped really big hints around the house (including the location and price of the compiler package I wanted) until my parents purchased a copy for me on my birthday.

There are a number of differences between the BASIC and C programming languages. One of the more obscure differences lies in how C deals with special variables that hold memory addresses. These variables are called pointers and are an integral part of the syntax and functionality of the language. BASIC did have a few special functions which could accept addresses of locations in memory (I’m thinking of CALL and USR specifically, although there were others). However, a variable holding an address was the same as one holding any other number, since BASIC had no distinct pointer type. The grammar of the C language is also much more complex than BASIC’s; it had special characters and symbols to express program scope and perform unary operations, which introduced me to the concept of coding style. When a programmer first learns a particular style of coding, it can turn into a religion, but I hadn’t been exposed to the language long enough to form an opinion. That would come later, and then be summarily discarded once I had more experience.

There were libraries of all sorts which provided functionality for working with strings, math functions, standard input and output, file functions, and so on. At the time, I thought C’s handling of strings (character data) was incredibly obtuse. Basically, I thought the need to manage memory was a complete nuisance. BASIC never required me to free strings after I had declared them, it just took care of it for me under the hood. Despite the coddling I received, I was familiar with the concept of array allocations since even BASIC had the DIM command which dimensioned array containers; re-allocation was also somewhat familiar because of REDIM. However, there were many more functions and parameters in C related to memory management, and I just thought the whole bloody thing was a real mess. The differences between heap and stack memory confused me for a while.

There were many features of the language and compiler I did enjoy, of course. Smaller and snappier programs were a huge improvement over the somewhat sluggish software produced by the QuickBASIC compiler and the BASIC interpreter. The compiled C programs didn’t depend on any run-time libraries either, although there was probably a way to statically link the QuickBASIC modules together. Pointers were powerful and loads of fun to use, especially once I learned the addresses for video memory, which introduced me to concepts like double buffering when I began learning about animation. Writing directly to video memory sounds pretty trivial to me now, but it was so intoxicating at the time. I was more involved in game programming by then, and these techniques allowed me to expand into areas I had never considered. They allowed for flicker-free animation, lightning-fast ASCII/ANSI window rendering via my custom text windowing library, and off-screen manipulations that let me easily zip buffers around the screen. A number of interesting text rendering concepts came from a book entitled Teach Yourself Advanced C in 21 Days by Bradley L. Jones, which is still worth reading to this day.
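As an illustration of the double-buffering idea, here is a small Python simulation of 80×25 colour text mode, in which each screen cell takes two bytes (a character code followed by an attribute byte) and a real DOS program would find that memory at segment B800h. Because a modern Python program cannot write to that address, both the visible screen and the off-screen buffer are plain `bytearray`s here; the function names are hypothetical, and attribute 0x1F (white on blue) is just an example colour. Everything is drawn into the back buffer first, and only the finished frame is copied to the visible buffer, which is what keeps half-drawn frames off the screen.

```python
COLS, ROWS = 80, 25                 # standard colour text mode
CELL_BYTES = 2                      # character code byte + attribute byte
SCREEN_BYTES = COLS * ROWS * CELL_BYTES


def cell_offset(row, col):
    """Byte offset of the cell at (row, col), stored row by row from the top-left."""
    return (row * COLS + col) * CELL_BYTES


def put_text(buffer, row, col, text, attr=0x07):
    """Write ASCII `text` into `buffer` at (row, col); text past column 79 is clipped.

    `attr` is the colour attribute byte; 0x07 is light grey on black.
    """
    for i, ch in enumerate(text):
        if col + i >= COLS:
            break
        offset = cell_offset(row, col + i)
        buffer[offset] = ord(ch)
        buffer[offset + 1] = attr


def flip(back, front):
    """Copy the finished off-screen frame to the visible screen in one pass."""
    front[:] = back


# `screen` stands in for the memory at B800:0000; `back` is the off-screen buffer.
screen = bytearray(SCREEN_BYTES)
back = bytearray(SCREEN_BYTES)

put_text(back, 0, 0, "Score: 100", attr=0x1F)   # white on blue
put_text(back, 12, 35, "GAME OVER")
assert screen == bytearray(SCREEN_BYTES)        # nothing visible before the flip
flip(back, screen)
assert screen[cell_offset(12, 35)] == ord("G")
```

In a real-mode C program of that era, `flip` would typically be a single block copy from the back buffer to a far pointer at B800:0000, which is far faster than drawing each character directly on the visible screen.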

At around this time, I also started to learn about serial and network communications, although the latter didn’t happen until my last year of high school. Basically, I wanted to learn how to get my computers to talk to one another. It all started when I became enchanted by the id Software game DOOM, which allowed you to network a few machines together and play against each other in vicious, winner-takes-all deathmatch combat. Incidentally, games like Doom, Wolfenstein 3D, and Blake Stone: Aliens of Gold led me down another long and winding path, 3D graphics, but that didn’t happen until a few months later. Again, the book store came to the rescue with a book entitled C Programmer’s Guide to Serial Communications by Joe Campbell. I was somewhat familiar with programming simple software that used a modem for communication, since BASIC supported this functionality through the OPEN statement, but I knew very little about the specifics. Once I dug into the first few chapters, I knew that was all going to change.

Categories: DOS, Programming, Reflections, Software
